Article written by Ana-Maria Ilea

Index

Keywords

Abstract

Introduction

Problem

Solutions

Conclusion

References

Keywords

Xsens, Motion Capture, Gameplay, Runtime, Unreal Engine, VR, Camera, Position, Offset, Root Motion, Live Link

Abstract

This project stood out by using motion capture technology as live input. The MVN Live Link plugin for Unreal Engine integrates the functionality of the Xsens suit and applies the data to a Skeletal Mesh. This way, the player character reproduces the movement of the user accurately. While the motion is realistic, neither the plugin nor the Xsens software has an accurate world position. Because of this, the Skeletal Mesh in a blueprint will always have an offset, and any position change of the actor will have undesired results. This poses an issue for any VR integration which must work with the Xsens suit and for any game in which the player is not static. This article proposes potential fixes to minimize the offset received from the Xsens software.

Introduction

The team is composed of eight fourth-year students: Anca Bancila – project management, Viktoria Prodanova – design and production, Victoria Beliska – art, Johan Wittmers – art and technical art, Ivan Ivanov – art and VFX, Bram Reuling – programming, Pepijn Wasser – programming and Ana-Maria Ilea – programming.

The client is Movella. Their mission is to bring meaning to movement in the entertainment, athletics, health, and industrial markets. To create intelligent and creative solutions for sensing and capturing motion, Movella brings together experts in the field (Movella | Bringing Meaning To Movement, n.d.).

The project challenged the team to create a prototype of a full-body motion capture game, made in Unreal Engine 5, which can be played on the screen and in VR. The game would use the Xsens suit and technology to capture the movement of the user. In this sense, the suit would become a player controller, sending input data to the game. The data holds information about the location and rotation of every bone in a skeleton. The team soon discovered that the suit sends data to the Skeletal Mesh of a character, giving it an offset whenever the user moves in real life. Furthermore, the system does not have an accurate world position to send to the Skeletal Mesh. Because of this, the program cannot keep track of the position of the user in real life. If the user moves, the distance would not be accurate and the world position of the player character would not be reliable. This poses an issue for the development of any game in which the player is not static.

Problem

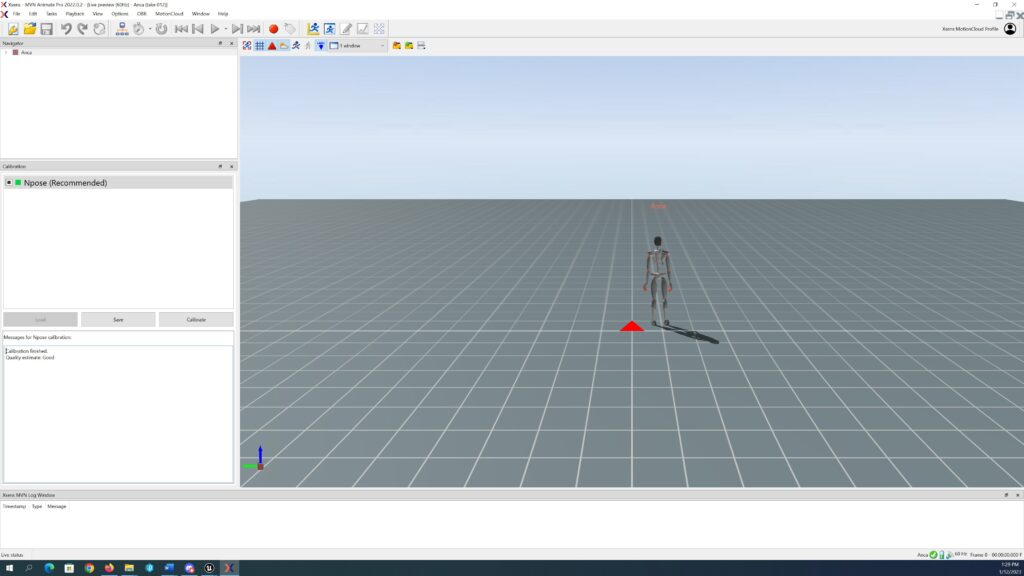

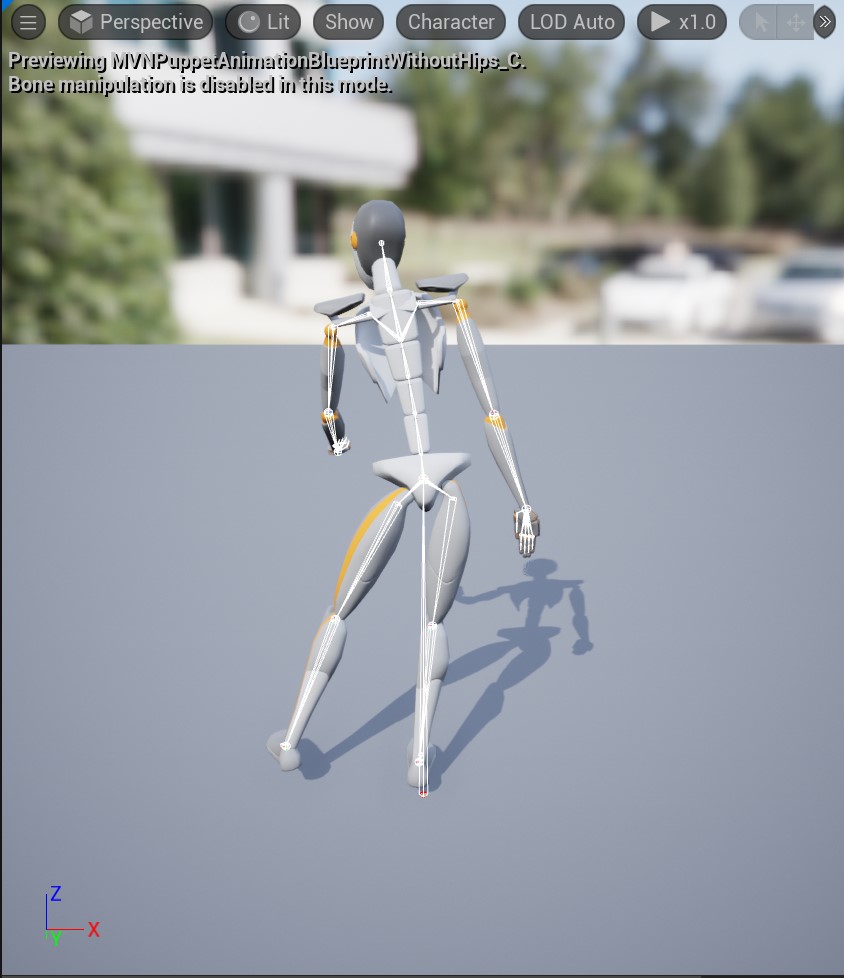

MVN Animate Pro takes the height and the foot length of the person wearing the suit. Based on these values, the program can place a character in its environment. The character will be placed around the origin of the system, though it can still contain a small offset (Figure 1). This offset is also sent to Unreal Engine, which moves the Skeletal Mesh of the player character by the same amount.

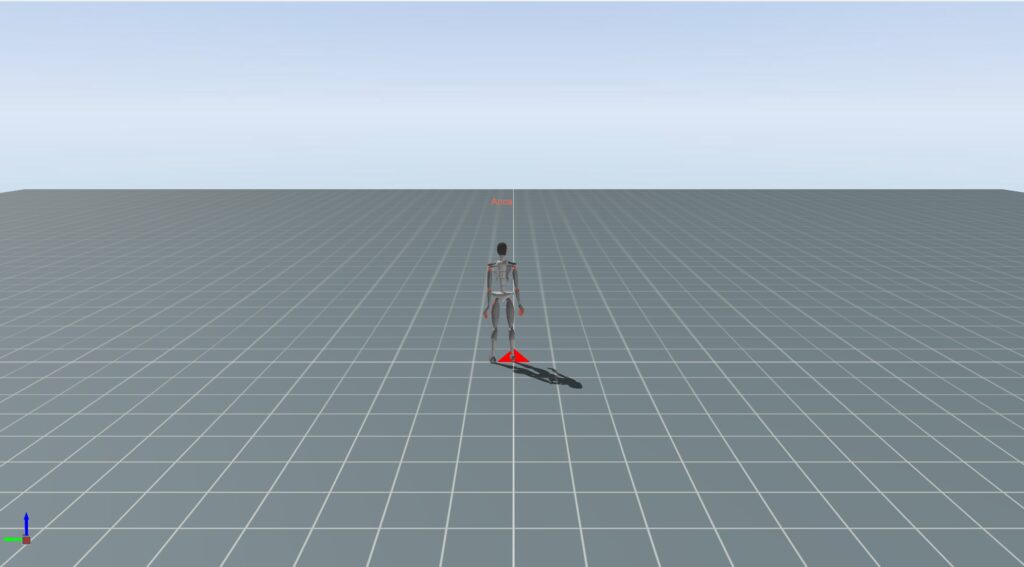

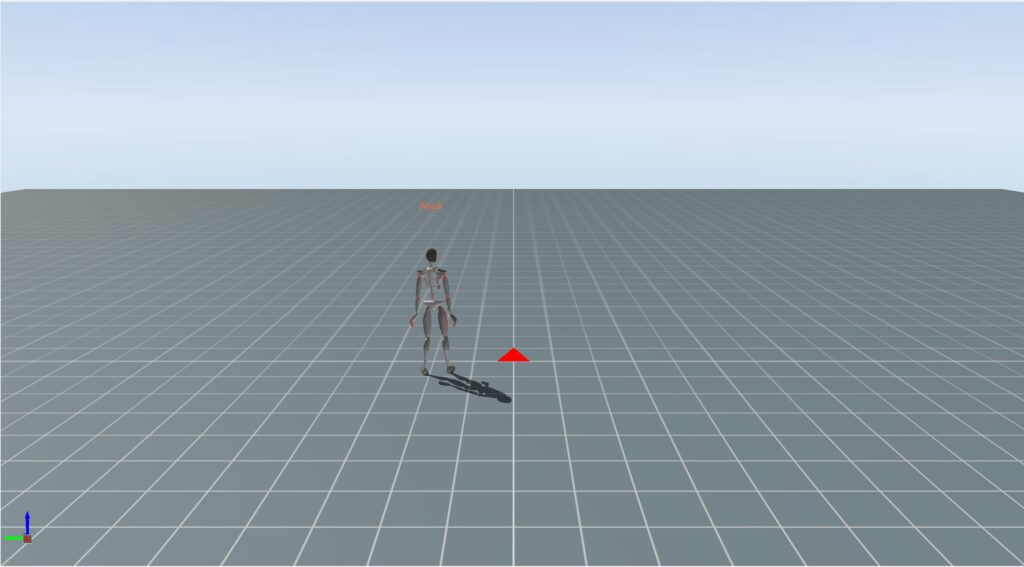

Over time, if the player moves even a few steps in any direction, the offset changes (Figure 2 and Figure 3). This is because the motion-tracking sensors are designed to be orientation sensors. Position has to be estimated by integrating acceleration, which makes the estimate highly inaccurate due to integration drift (How Do I Calculate Position and/or Velocity from Acceleration and How about Integration Drift?, 1/11/2022).
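To illustrate why integration drift grows so quickly, the following minimal sketch double-integrates acceleration samples for a user who is standing still. The constant sensor bias of 0.01 m/s² and the 100 Hz sample rate are assumptions chosen for illustration, not values taken from the Xsens hardware.

```python
def integrate_position(accelerations, dt):
    """Double-integrate acceleration samples (m/s^2) into a position (m)."""
    velocity = 0.0
    position = 0.0
    for acceleration in accelerations:
        velocity += acceleration * dt   # first integration: velocity
        position += velocity * dt       # second integration: position
    return position


def drift_after(seconds, bias=0.01, rate_hz=100):
    """Position error for a stationary user with an assumed constant bias."""
    samples = [bias] * (seconds * rate_hz)
    return integrate_position(samples, 1.0 / rate_hz)


if __name__ == "__main__":
    for seconds in (1, 10, 60):
        print(f"{seconds:>3} s -> {drift_after(seconds):.3f} m of drift")
```

Because the error is integrated twice, it grows roughly with the square of the elapsed time: in this sketch, a bias that is barely noticeable after one second adds up to about 18 m after one minute.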

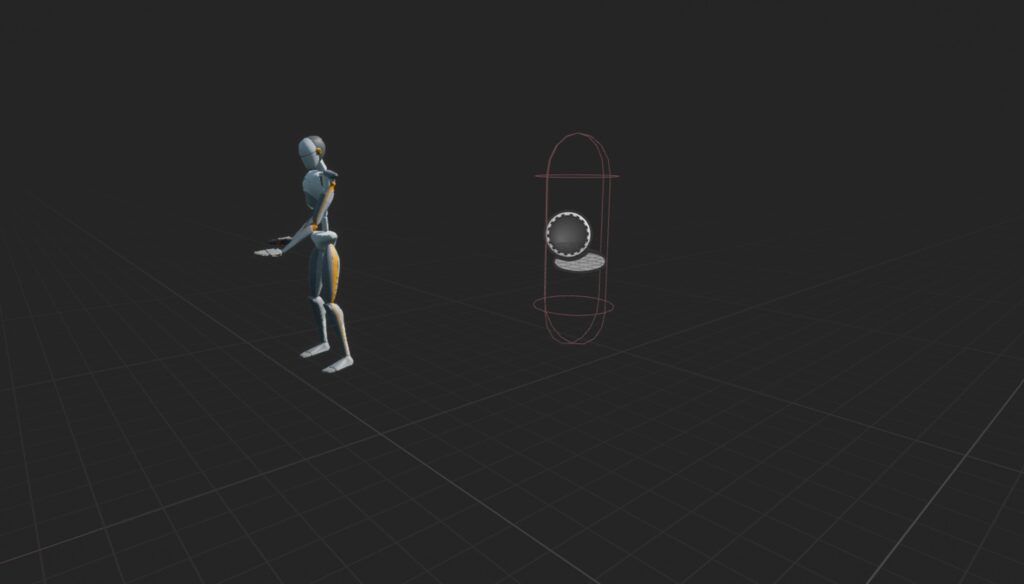

This workflow makes the player movement unreliable. Without any workarounds, the player must remain in the same place for the entire game. Another problem is that MVN Animate sends its data to the Skeletal Mesh rather than to the actor. This gives the Mesh an offset from the parent actor (Figure 4). As a result, any collision or position-related functionality must be done through the Skeletal Mesh, specifically through its root.

Moving the actor to the position of the root bone will result in an endless movement (Figure 5). This is because the offset received from MVN Animate is relative to the parent actor.
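The endless movement can be reproduced with a small simulation. This is a sketch under the assumption that the offset stays constant and is applied relative to the actor every tick; the function name and the 2D coordinates are illustrative only.

```python
def simulate_follow_root(offset, ticks):
    """Move the actor to the root bone's world position every tick.

    `offset` is the root bone position relative to the parent actor,
    as received from the motion capture stream (assumed constant here).
    """
    actor = (0.0, 0.0)
    history = []
    for _ in range(ticks):
        # The root bone's world position is the actor position plus the offset.
        root_world = (actor[0] + offset[0], actor[1] + offset[1])
        # Snapping the actor to the root bone does not remove the offset:
        # on the next tick the bone is again `offset` away from the actor.
        actor = root_world
        history.append(actor)
    return history


if __name__ == "__main__":
    print(simulate_follow_root((10.0, 0.0), 3))
```

With an offset of 10 units on the X axis, the actor ends up at 10, 20 and 30 units after three ticks and never catches up with the bone.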

The root bone for the Xsens system is the Hips or Pelvis bone. This means that the relative location and rotation of the character are stored in this bone. The Hips bone is the highest in the skeleton hierarchy. This means that every change in the Hips bone will change the configuration of all the bones. In this sense, if the Hips bone is not updated with the data from MVN Animate Pro, the Skeletal Mesh will remain in place, though the skeleton itself will be inaccurate (Figure 6, Figure 7).

VR Integration

A VR camera must be placed in the head of the character. The position of the Skeletal Mesh and the position of the VR camera will be different every time the game is played due to the offset received from the motion capture suit. This is because the VR camera should remain static in the character blueprint, while the Skeletal Mesh moves.

The VR camera cannot be attached to a head socket in the skeleton. This is because the VR system will always try to override the rotation of the camera, while attaching the camera to a head socket will alter the rotation as well. The two rotations conflict, which can make the camera behave undesirably and can even cause the rotations to be interpolated differently every time the game is played.

To sum up, the offset received from MVN Animate Pro causes three problems that need solutions:

- Find a way to move the actor, without moving the skeletal mesh or interrupting the motion capture

- Find a way to constrain the Hips bone position without disturbing the position and rotation of other bones.

- Find a way to synchronize a VR camera with the position of the head while the motion is tracked, without altering the rotation received from the VR headset

Solutions

Solution 1 – Calibrating the hardware before playing.

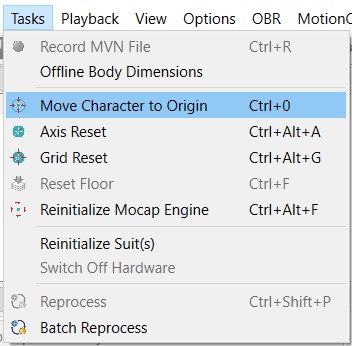

The positional accuracy of the MVN system is highly dependent on how the system is set up. With accurate measurements and a well-performed N-Pose calibration, the inaccuracy should be around 1% of the travelled distance. This could be fine when the game is short and does not involve a lot of walking around. The remaining drift can partly be overcome by regularly resetting the position in MVN (Figure 8). The axis might also have to be reset based on the orientation of the character. In this solution, there is one character blueprint in which all functionality happens.

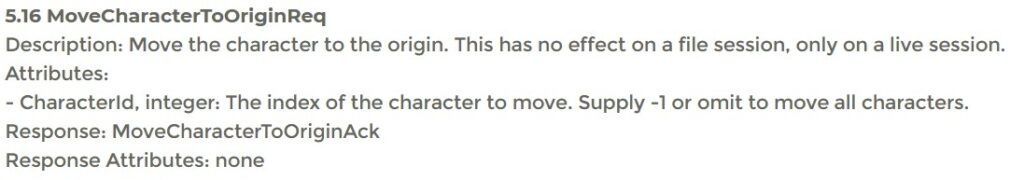

Additionally, a UDP command could be sent when the game starts. MVN will receive the command and move the character to the origin (Figure 9) (UDP Remote Control, 1/31/2022).
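As a rough sketch of what sending such a command could look like outside of Unreal Engine, the snippet below sends a single UDP datagram. The payload `<PLACEHOLDER_COMMAND/>`, the host and the port are hypothetical placeholders; the actual message text and port must be taken from the UDP Remote Control documentation cited above.

```python
import socket


def encode_command(message: str) -> bytes:
    """Encode a command string as UTF-8 bytes for a UDP datagram."""
    return message.encode("utf-8")


def send_udp_command(message: str, host: str, port: int) -> int:
    """Send one UDP datagram containing `message`; returns the bytes sent."""
    with socket.socket(socket.AF_INET, socket.SOCK_DGRAM) as sock:
        return sock.sendto(encode_command(message), (host, port))


# Example call when the game starts (all three values are placeholders):
# send_udp_command("<PLACEHOLDER_COMMAND/>", "127.0.0.1", 12345)
```

UDP is connectionless, so the sender receives no confirmation that MVN handled the command. The game should therefore not assume that the reset has happened.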

VR Integration

This solution does not solve the synchronization of the camera and the Skeletal Mesh positions. It is possible to get acceptable results if both the suit and the VR headset are calibrated in the same place. Both the suit and the VR headset have position tracking and it is possible to semi-synchronize the positions. This solution could be viable if the character is static. The VR camera has accurate world position tracking while the suit does not. This means that over time the offset of the suit will be modified, thus desynchronizing the two positions.

Overall: This solution is viable for a static player or a player with limited movement. The VR integration is possible with acceptable results.

Solution 2 – Simulating Root Motion for Live Link

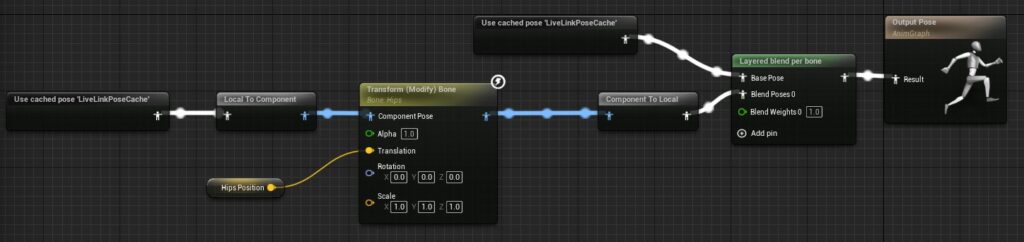

This solution involves the use of two character blueprints: a player character and a proxy character. Each character will have its own animation blueprints. The proxy character animation blueprint will have the live link pose node to receive the information from the Xsens software. The player character animation blueprint will constrain the Hips bone. This will keep the player character in place while not interrupting the motion capture (Figure 10).

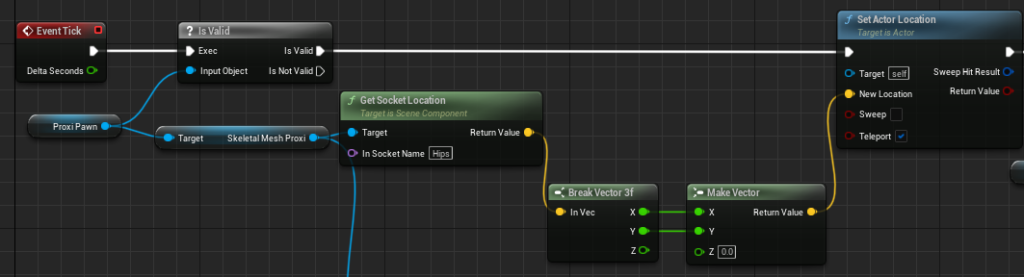

The other bones of the player character’s skeleton will have wrong positions and rotations. To fix this, the position of the player character will be updated with the position of the Hips bone of the proxy character (Figure 11).

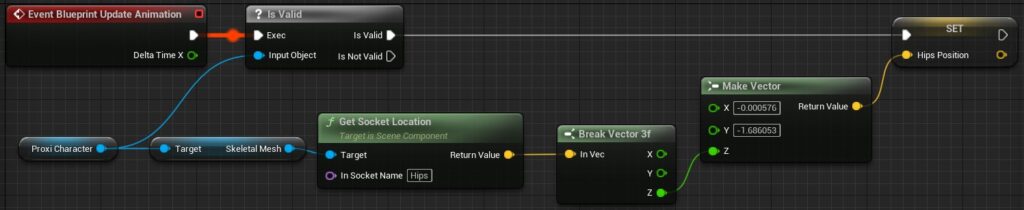

The last issue left is the Z position of the Hips bone. With the setup so far, the player character cannot squat or crouch. To fix this issue, a hips position variable will be made in the animation blueprint of the player character. This variable is updated every tick with the Z position of the Hips bone of the proxy character and the X and Y position of the original transform of the bone (Figure 12).
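The hips position variable described above can be sketched as follows. The `Vec3` type, the function name and the sample values are illustrative assumptions; in the project this logic lives in the animation blueprint rather than in code.

```python
from typing import NamedTuple


class Vec3(NamedTuple):
    x: float
    y: float
    z: float


def constrained_hips_position(original_hips: Vec3, proxy_hips: Vec3) -> Vec3:
    """Keep the Hips bone in place horizontally but follow the proxy's height.

    X and Y come from the original transform of the bone, so the mesh does
    not slide away from the actor. Z comes from the proxy character, so the
    player character can still squat or crouch.
    """
    return Vec3(original_hips.x, original_hips.y, proxy_hips.z)


if __name__ == "__main__":
    original = Vec3(0.0, 0.0, 95.0)        # hips in the reference pose
    proxy = Vec3(40.0, -12.0, 60.0)        # user has walked away and crouched
    print(constrained_hips_position(original, proxy))
```

In this example, the horizontal offset of the proxy is discarded while the lowered hip height is kept.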

VR Integration

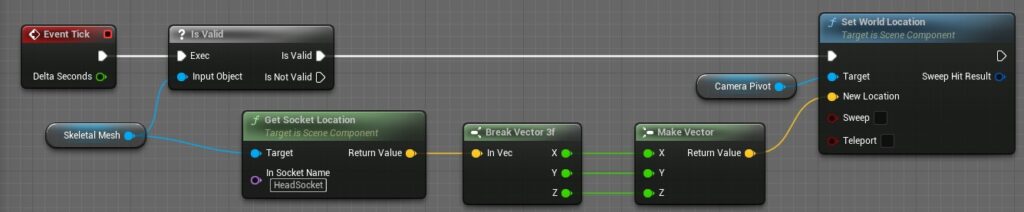

The VR integration in this case is based on the proxy character as well. The camera is put under a pivot, to make the movement separate from the input received from the VR headset. The pivot is updated every tick with the position of the Head bone of the player character (Figure 13). To add any offset, a custom socket attached to the Head bone must be made. This ensures a set offset which is not influenced by the orientation of the character. The rotation and orientation of the camera are set by the VR headset and the Skeletal Mesh used should have the head invisible to prevent any clipping.
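A minimal sketch of the per-tick pivot update is shown below. It assumes that only the yaw of the head matters for the socket offset and uses illustrative names and units; it is not the blueprint used in the project.

```python
import math
from typing import NamedTuple, Tuple


class Vec3(NamedTuple):
    x: float
    y: float
    z: float


def socket_world_position(head_position: Vec3, head_yaw_deg: float,
                          local_offset: Vec3) -> Vec3:
    """World position of a socket attached to the Head bone.

    The offset is defined in the bone's local space, so it is rotated by
    the head's yaw before being added (yaw-only rotation is an assumption).
    """
    yaw = math.radians(head_yaw_deg)
    cos_yaw, sin_yaw = math.cos(yaw), math.sin(yaw)
    return Vec3(
        head_position.x + cos_yaw * local_offset.x - sin_yaw * local_offset.y,
        head_position.y + sin_yaw * local_offset.x + cos_yaw * local_offset.y,
        head_position.z + local_offset.z,
    )


def update_camera(head_position: Vec3, head_yaw_deg: float, local_offset: Vec3,
                  hmd_rotation: Tuple[float, float, float]):
    """Return the new pivot position and the unchanged headset rotation."""
    pivot = socket_world_position(head_position, head_yaw_deg, local_offset)
    # The pivot only supplies the position; the rotation stays under the
    # control of the VR headset and is passed through untouched.
    return pivot, hmd_rotation
```

Keeping the position on the pivot and the rotation on the camera means that the motion capture data and the headset never write to the same transform component.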

An alternative to this setup is the No Level scenario. This option can be enabled in MVN Animate Pro; however, it will not give any control over the Z axis.

Overall: This solution simulates root motion. In this case, the entire actor moves with the motion capture and the VR camera is synchronized with the head of the character. This solution does not take into consideration the changing offset during gameplay, making it viable for a game with a static player or a player with small movement.

Solution 3 – Tracking position with an HTC Vive Tracker.

MVN Animate Pro can link to an HTC Vive Tracker to record the accurate world position. This is done through object tracking and requires HTC Vive setup and hardware. By aligning the MVN and SteamVR coordinate systems, the position can be tracked (Object Tracking: HTC Vive, 1/17/2022).

VR Integration

The setup requires the use of an HTC Vive set. The VR camera can easily be integrated after aligning the positions of the coordinate systems.

Overall: This solution records accurate world position. The downside is that additional hardware must be used to achieve the results. Additionally, the solution might not work with VR sets other than an HTC Vive set.

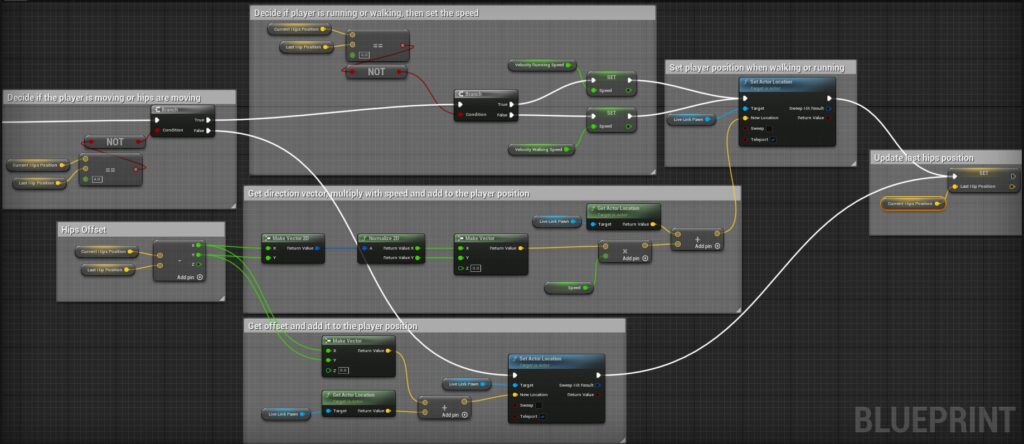

Solution 4 – Using a proxy character to fully control the movement

This solution builds on the second solution. Instead of updating the character’s position with the unreliable position from the MVN system, the proxy character can decide whether the user has moved by comparing the Hips position between two frames. If the Hips position has changed, the character is moved at a constant speed in the direction of the hips movement. Based on the distance the hips travel between frames, it can be determined whether the user is moving their hips while staying in place, walking, or running (Figure 14).
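The frame-to-frame comparison can be sketched as below. The thresholds and movement speeds are assumed values in cm/s chosen for illustration; they are not the values used in the project and would need tuning or calibration.

```python
import math

# Assumed constant movement speeds per state, in cm/s.
SPEEDS = {"idle": 0.0, "walk": 150.0, "run": 400.0}


def classify(distance, dt, walk_threshold=30.0, run_threshold=250.0):
    """Classify the hips movement from the distance travelled in one frame."""
    speed = distance / dt
    if speed < walk_threshold:
        return "idle"   # hips moving while the user stays in place
    if speed < run_threshold:
        return "walk"
    return "run"


def step_character(position, prev_hips, curr_hips, dt):
    """Move the character at a constant speed in the hips' direction."""
    dx = curr_hips[0] - prev_hips[0]
    dy = curr_hips[1] - prev_hips[1]
    distance = math.hypot(dx, dy)
    state = classify(distance, dt)
    if state == "idle":
        return position, state
    speed = SPEEDS[state]
    # Only the direction of the hips delta is used; its length is discarded
    # so that the drifting MVN position never drives the character directly.
    new_position = (position[0] + dx / distance * speed * dt,
                    position[1] + dy / distance * speed * dt)
    return new_position, state
```

Because the character's displacement depends only on the classified state, drift in the reported position affects the character only if it is large enough to cross a threshold.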

VR Integration

The VR integration has the same workflow as the one described in the second solution.

Overall: This solution provides the most improvement without using additional hardware. The character’s walking and running speeds can desynchronize from the user’s actual walking and running speeds. To solve this, the speed would have to be calibrated at the beginning of the game.

An additional solution for VR only

The position of a VR headset (e.g., a Quest 2 headset) can be used instead. VR headsets have position tracking, which could be used to take over the position that MVN provides, so that only the orientations of the limbs are taken from MVN. This solution is only viable for VR games and will not work for a game played on screen.

Conclusion

All solutions provide a level of success depending on what kind of camera and movement is required for the game. For a VR solution, the camera pivot position must be updated with the position of the Head bone. For minimizing the offset received by MVN, the fourth solution provides the most opportunities for improvement without any additional hardware. If an HTC Vive set with trackers is available, solution three will provide the most accurate results.

References

Movella | Bringing Meaning To Movement. (n.d.). Retrieved September 15, 2022, from https://www.movella.com/

How do I calculate position and/or velocity from acceleration and how about integration drift? (1/11/2022). Retrieved January 11, 2023, from https://base.xsens.com/s/article/How-do-I-calculate-position-and-or-velocity-from-acceleration-and-how-about-integration-drift?language=en_US

UDP Remote Control. (1/31/2022). Retrieved January 11, 2023, from https://base.xsens.com/s/article/UDP-Remote-Control?language=en_US

Object Tracking: HTC Vive. (1/17/2022). Retrieved January 11, 2023, from https://movella.force.com/XsensKnowledgebase/s/article/Object-Tracking-HTC-Vive-1605786850124?language=en_US